In the dynamic world of artificial intelligence (AI), critics often dismiss Large Language Models (LLMs) as “stupid,” mere predictors of the next word. The critique has some merit when LLMs are used zero-shot, generating a response based solely on the immediate prompt. However, it overlooks the broader potential of these models. AI, as I envision it, means using LLMs not just as generative models but as the foundation of systems that can think and learn more like humans. This could eventually lead us toward higher intelligence, much as evolution refined the human brain.

Recent revelations from OpenAI highlight a significant leap in this direction. Their much-anticipated “Strawberry” model, released as OpenAI o1 (referred to here as GPT-o1), performs comparably to PhD students on challenging benchmark problems in physics, chemistry, and biology, according to OpenAI, and also excels in math and coding. The model distinguishes itself by producing a hidden “Chain of Thought,” reasoning internally before it delivers an answer. This ability to deliberate mirrors human cognition and lays the groundwork for AI systems that not only predict words but engage in complex reasoning, pushing AI toward something resembling genuine intelligence.

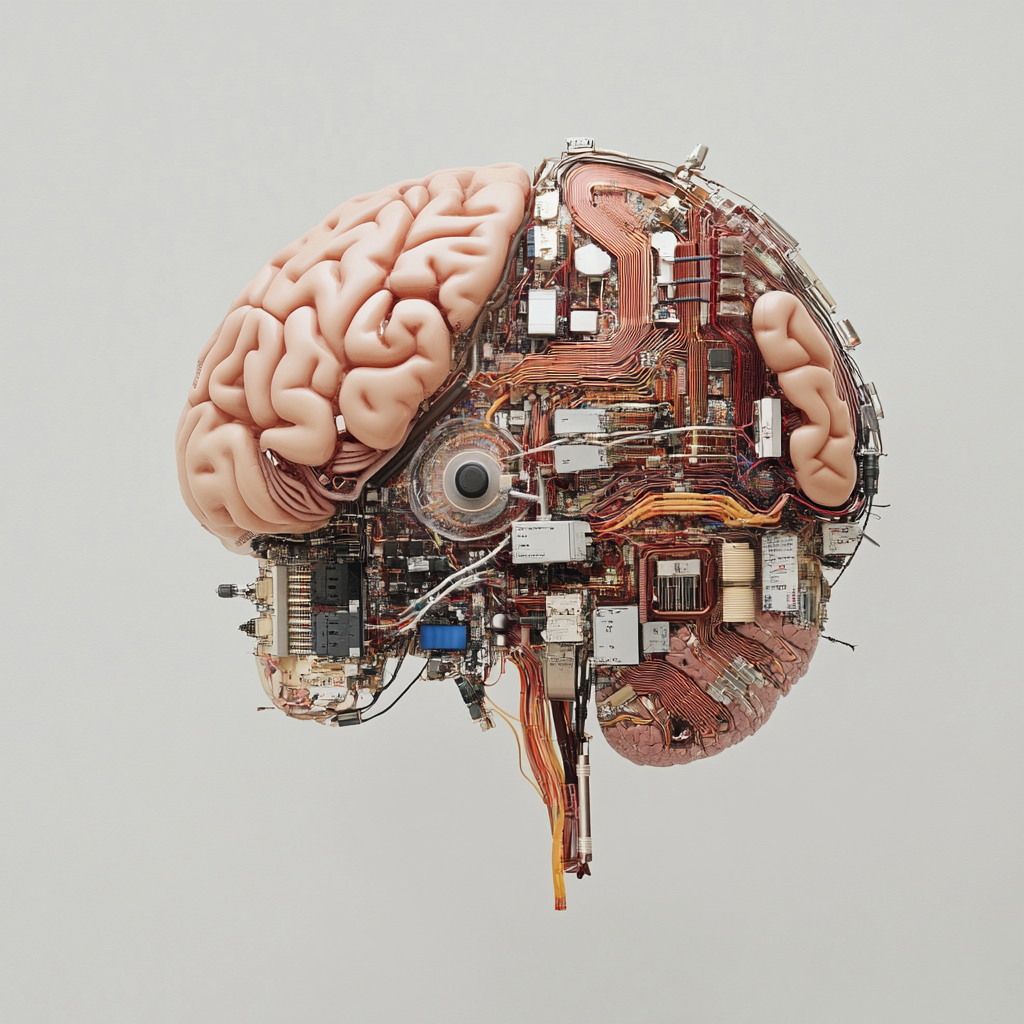

The Human Brain as a Model for AI

The complexity of the human brain, with its specialized regions dedicated to tasks like language processing and decision-making, provides an insightful template for developing AI. Each area of our brain contributes uniquely to our cognitive processes, from Broca’s area handling language to the prefrontal cortex managing decision-making. This compartmentalization allows for efficient processing and integration of information, a model I believe AI should emulate.

By structuring AI systems as modular components for tasks such as language and memory, mirroring the brain’s architecture, we can build machines that process information with greater precision and depth. Modularity lets each part of the system be optimized for its own domain, just as specific brain regions excel in their respective roles. Drawing a loose parallel between synaptic connections and a model’s weights and embeddings, AI can store and retrieve information in a way that echoes human learning and adaptation.
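To make the modular idea concrete, here is a minimal Python sketch, assuming a hypothetical design in which each “region” is a plain function and a small router dispatches each request by task type. The module names (`language_module`, `memory_module`) and the routing table are illustrative assumptions, not the API of any real system; in practice the language module would wrap an LLM call.

```python
from typing import Callable, Dict

# Hypothetical "brain regions": each module handles exactly one kind of task.
def language_module(text: str) -> str:
    # Stand-in for an LLM call: here it only normalizes whitespace and capitalizes.
    return " ".join(text.split()).capitalize()


_facts: Dict[str, str] = {}


def memory_module(text: str) -> str:
    # Stores "key = value" pairs and looks up bare keys.
    if "=" in text:
        key, value = (part.strip() for part in text.split("=", 1))
        _facts[key] = value
        return f"stored {key}"
    return _facts.get(text.strip(), "unknown")


# Routing table: task type -> specialized module.
MODULES: Dict[str, Callable[[str], str]] = {
    "language": language_module,
    "memory": memory_module,
}


def route(task: str, payload: str) -> str:
    """Dispatch a request to the module registered for its task type."""
    if task not in MODULES:
        raise ValueError(f"no module registered for task {task!r}")
    return MODULES[task](payload)


print(route("memory", "capital = Paris"))
print(route("memory", "capital"))
print(route("language", "hello   world"))
```

Keeping the routing table separate from the modules means a new “region” can be added without touching the others, which is the practical payoff of the modularity argued for above.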

Large Language Models (LLMs) as the Language Centre of AI

LLMs, such as OpenAI’s GPT-o1, are paving the way for AI to handle language much like the human brain. These models are designed to understand and generate language with the complexity and nuance found in human communication. GPT-o1, in particular, uses a hidden “Chain of Thought,” simulating a process similar to human reasoning. Rather than answering with the first likely continuation, it breaks problems into intermediate steps, tries alternative approaches, and corrects its own mistakes before settling on a conclusion.
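OpenAI has not published how GPT-o1’s hidden reasoning works internally, so the following is only an analogy: a minimal Python sketch in which a toy solver records its intermediate steps in a separate trace and presents only the final answer. The `Response` type and `solve_with_hidden_reasoning` function are hypothetical, and the arithmetic task simply stands in for a multi-step problem.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Response:
    answer: float
    # repr=False keeps the trace out of the printed response, loosely
    # mimicking a reasoning process the user never sees.
    reasoning: List[str] = field(default_factory=list, repr=False)


def solve_with_hidden_reasoning(
    items: List[Tuple[str, float, int]], discount_pct: float
) -> Response:
    """Toy multi-step problem: total an order, then apply a percentage discount."""
    steps: List[str] = []
    subtotal = 0.0
    for name, price, qty in items:
        line_total = price * qty
        steps.append(f"{name}: {qty} x {price} = {line_total}")
        subtotal += line_total
    steps.append(f"subtotal = {subtotal}")
    total = round(subtotal * (1 - discount_pct / 100), 2)
    steps.append(f"after {discount_pct}% discount: {total}")
    return Response(answer=total, reasoning=steps)


response = solve_with_hidden_reasoning([("pen", 2.5, 4), ("notebook", 4.0, 2)], 10)
print(response)            # only the answer appears in the printed form
print(response.reasoning)  # the intermediate steps exist but are kept separate
```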

This internal reasoning process allows the model to solve complex problems at levels comparable to human experts. OpenAI reports that it ranks in the 89th percentile on competitive programming questions (Codeforces) and places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME). By building models that think before answering, we move closer to AI systems that function with the sophistication of the human mind, breaking the mold of conventional LLMs and setting a new standard for what AI can achieve.

Memory Management: Short-Term vs. Long-Term Memory in AI

The human brain excels at distinguishing between short-term and long-term memories, a crucial skill for cognitive efficiency. AI systems can greatly benefit from adopting similar memory management strategies. By integrating mechanisms that parallel how the brain processes and stores information, AI can balance quick data retrieval with deep storage capabilities.
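One way to picture this split is a minimal Python sketch of a two-tier memory: a small short-term buffer that forgets its oldest entries, plus a long-term store for items that have been “rehearsed.” The class name `TwoTierMemory` and the promote-after-N-writes rule are hypothetical choices made for illustration, not a description of how any production model manages memory.

```python
from collections import Counter, deque
from typing import Deque, Dict, Optional, Tuple


class TwoTierMemory:
    """Toy memory: a bounded short-term buffer plus a long-term store.

    Hypothetical consolidation policy: an item is copied into long-term
    memory once it has been written `promote_after` times, loosely
    analogous to rehearsal strengthening a memory.
    """

    def __init__(self, capacity: int = 3, promote_after: int = 2) -> None:
        self.short_term: Deque[Tuple[str, str]] = deque(maxlen=capacity)
        self.long_term: Dict[str, str] = {}
        self._rehearsals: Counter = Counter()
        self.promote_after = promote_after

    def remember(self, key: str, value: str) -> None:
        self.short_term.append((key, value))  # oldest entry drops off when full
        self._rehearsals[key] += 1
        if self._rehearsals[key] >= self.promote_after:
            self.long_term[key] = value

    def recall(self, key: str) -> Optional[str]:
        # Check recent context first (newest to oldest), then long-term storage.
        for k, v in reversed(self.short_term):
            if k == key:
                return v
        return self.long_term.get(key)


mem = TwoTierMemory(capacity=2, promote_after=2)
mem.remember("name", "Ada")
mem.remember("name", "Ada")      # second write consolidates "name"
mem.remember("city", "Paris")
mem.remember("topic", "AI")      # "name" has left the short-term buffer
print(mem.recall("name"))        # still recalled from long-term memory
```

The design choice worth noting is that forgetting is automatic (the bounded `deque`) while retention is earned, which mirrors the brain’s trade-off between quick access and durable storage.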

OpenAI’s GPT-o1 advances this balance on the reasoning side rather than through new memory mechanisms: it spends more time thinking through complex problems before it responds, trading an immediate reply for deliberate reasoning when a task demands it. A memory architecture built on the same principle would mirror the human brain’s ability to decide what information to store for the long term while keeping other data readily accessible for short-term use, making the AI’s responses more contextually aware.

The Brain’s External Knowledge Access: Browsing and Learning

Humans constantly learn from the world around them, updating their knowledge by reading, listening, and observing. I propose that AI should be equipped with similar tools for external knowledge acquisition, allowing for more informed and timely responses. By enabling AI systems to access real-time data through browsers or APIs, we enhance their capacity to understand and respond effectively.
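The sketch below shows the general pattern in Python, under loud assumptions: `StubWebSource` is a hypothetical in-memory stand-in for a browser or search API (so the example runs offline), the `example.org` URLs are placeholders, and relevance is a crude word-overlap score. A real system would call an actual search service and let the LLM compose the final reply from the retrieved text.

```python
import re
from typing import Dict, List, Protocol, Set, Tuple


def _tokens(text: str) -> Set[str]:
    """Lower-case word tokens, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


class KnowledgeSource(Protocol):
    """Anything that can answer a search query with (url, text) results."""

    def search(self, query: str) -> List[Tuple[str, str]]: ...


class StubWebSource:
    """Hypothetical stand-in for a browser or search API, backed by canned pages."""

    def __init__(self, pages: Dict[str, str]) -> None:
        self.pages = pages

    def search(self, query: str) -> List[Tuple[str, str]]:
        terms = _tokens(query)
        # Score each page by how many query words it shares (a crude relevance measure).
        scored = [(len(terms & _tokens(text)), url, text) for url, text in self.pages.items()]
        scored.sort(key=lambda item: item[0], reverse=True)  # stable: ties keep page order
        return [(url, text) for score, url, text in scored if score > 0]


def answer_with_lookup(question: str, source: KnowledgeSource, top_k: int = 1) -> str:
    """Fetch external context first, then reply using it and cite where it came from."""
    hits = source.search(question)[:top_k]
    if not hits:
        return "I could not find anything on that."
    return " ".join(f"{text} [source: {url}]" for url, text in hits)


pages = {
    "https://example.org/broca": "Broca's area is involved in speech production.",
    "https://example.org/hippocampus": "The hippocampus is involved in forming new memories.",
}
print(answer_with_lookup("Which brain area is involved in speech?", StubWebSource(pages)))
```

Because `answer_with_lookup` depends only on the `KnowledgeSource` protocol, the stub can be swapped for a real browser or API client without changing the reasoning code.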

At launch, GPT-o1 lacked such tools; OpenAI noted that the early version did not yet support features like browsing the web or uploading files. Tool-enabled assistants such as ChatGPT with web browsing already fetch and integrate information from diverse sources, and pairing that ability with o1-style reasoning would mirror our own intellectual curiosity: AI systems that don’t just process information but continuously expand their understanding. This integration fosters a seamless relationship between AI and daily human activities, transforming AI into an invaluable partner in learning and discovery.

Simulating Human Thought Processes: Tree of Thought (ToT) and Agentic Systems

To advance AI, it’s crucial to simulate human thought processes using frameworks like Tree of Thought (ToT) and agentic systems. Instead of committing to a single chain of reasoning, ToT lets a model propose several candidate intermediate steps (“thoughts”), evaluate how promising each one is, and search the resulting tree, abandoning dead ends, much as humans weigh options before deciding. This approach supports more nuanced, informed decisions and strengthens AI’s reasoning capabilities.
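The search loop behind ToT can be sketched in Python as a breadth-first search that keeps only the best few partial solutions at each depth. In the original ToT work an LLM both proposes and scores the thoughts; here plain functions stand in for those LLM calls, and the toy puzzle (reach 10 from 1 using “+3” or “*2”) is an assumption chosen only so the behavior is easy to follow.

```python
from typing import Callable, List, Optional, Tuple

# A state is (current value, the sequence of "thoughts" that produced it).
State = Tuple[int, Tuple[str, ...]]


def tree_of_thought(
    start: State,
    propose: Callable[[State], List[State]],
    evaluate: Callable[[State], float],
    is_goal: Callable[[State], bool],
    beam_width: int = 2,
    max_depth: int = 4,
) -> Optional[State]:
    """Breadth-first Tree-of-Thought search keeping the best `beam_width` states per level."""
    frontier: List[State] = [start]
    for _ in range(max_depth):
        # 1. Expand: propose several candidate next thoughts from every kept state.
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            return None
        # 2. Evaluate: score the partial solutions and rank them best-first.
        candidates.sort(key=evaluate, reverse=True)
        for candidate in candidates:
            if is_goal(candidate):
                return candidate
        # 3. Prune: keep only the most promising branches for the next level.
        frontier = candidates[:beam_width]
    return None


# Toy puzzle (an assumption for illustration): reach TARGET from 1 using "+3" or "*2".
TARGET = 10


def propose(state: State) -> List[State]:
    value, path = state
    return [(value + 3, path + ("+3",)), (value * 2, path + ("*2",))]


def evaluate(state: State) -> float:
    return -abs(TARGET - state[0])  # closer to the target scores higher


def is_goal(state: State) -> bool:
    return state[0] == TARGET


print(tree_of_thought((1, ()), propose, evaluate, is_goal, beam_width=2))
print(tree_of_thought((1, ()), propose, evaluate, is_goal, beam_width=1))
```

With `beam_width=1` the search collapses into greedy, single-path reasoning and, on this puzzle, never reaches 10 within four steps (1, 4, 8, 11, 14), because the step to 8 looks best locally. Keeping two branches alive lets it find 1, 4, 7, 10, which is exactly the advantage of weighing several options before committing.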

Agentic systems further bolster AI by dividing tasks among dedicated agents, each responsible for a specific cognitive function. This setup mirrors our brain’s compartmentalized approach, where different regions manage tasks such as memory and decision-making. By combining ToT and agentic systems, AI can produce outputs that closely align with human thought, making interactions more intuitive and human-like.
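A minimal Python sketch of this division of labor follows, assuming a hypothetical “blackboard” design: each agent owns one cognitive function (recall, deciding, phrasing) and communicates only through a shared workspace. The agent names and the single stored fact are illustrative; a real agentic system would typically let an LLM choose which agent to call next, whereas the order here is fixed for clarity.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Blackboard:
    """Shared workspace that every agent reads from and writes to."""
    task: str
    notes: Dict[str, str] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)


Agent = Callable[[Blackboard], None]


def memory_agent(board: Blackboard) -> None:
    # Hypothetical recall step: look up stored facts whose key appears in the task.
    known = {"broca": "Broca's area supports speech production."}
    hits = [fact for key, fact in known.items() if key in board.task.lower()]
    board.notes["facts"] = " ".join(hits) or "no stored facts"
    board.log.append("memory: recalled facts")


def reasoning_agent(board: Blackboard) -> None:
    # Decide whether there is enough information to answer.
    enough = board.notes["facts"] != "no stored facts"
    board.notes["decision"] = "answer" if enough else "ask for more detail"
    board.log.append(f"reasoning: decided to {board.notes['decision']}")


def language_agent(board: Blackboard) -> None:
    # Turn the internal notes into a user-facing reply.
    if board.notes["decision"] == "answer":
        board.notes["reply"] = board.notes["facts"]
    else:
        board.notes["reply"] = "Could you tell me more about what you need?"
    board.log.append("language: wrote reply")


def run_pipeline(task: str, agents: List[Agent]) -> Blackboard:
    board = Blackboard(task=task)
    for agent in agents:
        agent(board)
    return board


board = run_pipeline("What does Broca's area do?", [memory_agent, reasoning_agent, language_agent])
print(board.notes["reply"])
print(board.log)
```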

My final thoughts

In summary, the evolution of AI involves drawing inspiration from the intricate systems of the human brain. By adopting a brain-inspired structure, AI agents can process and generate information in ways that align more closely with human understanding. GPT-o1 exemplifies this approach, serving as a linguistic core with advanced reasoning capabilities that dedicated memory management could complement. Integrating thought frameworks like ToT and agentic systems positions AI for a future where it can think, learn, and interact in human-like ways.

As we move forward, exploring brain-inspired architectures will redefine AI’s role in society. This evolution invites developers and researchers to innovate relentlessly, pushing the boundaries of what’s possible with AI and redefining our relationship with technology.